Rosen Diankov | Research and Projects

(roughly sorted starting from most recent)

Integrating Planning and Vision for Reliable Manipulation

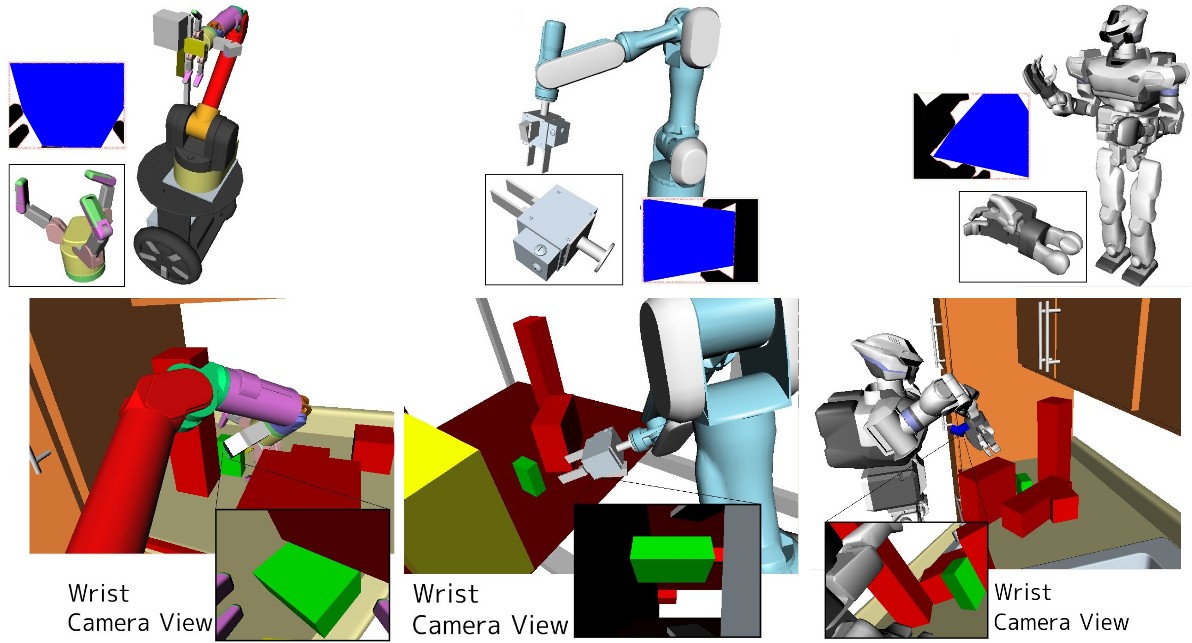

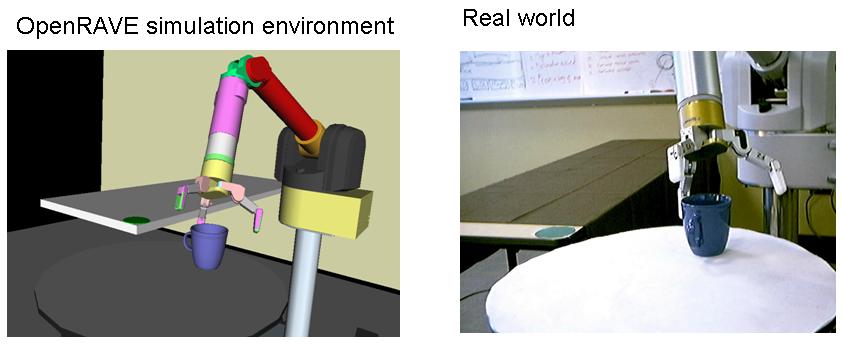

Recently we developed a framework that automatically analyzes the capabilities of camera sensors and uses this visibility information to increase the success rate of autonomous manipulation. One feature of the new framework is that at first we only consider moving the robot to get a better view of the object. Then, during the visual feedback phase, we constantly consider which grasp to use. One interesting phenomenon is that overall planning times are actually faster when visibility is considered than when it is not.

ikfast - The Analytical Inverse Kinematics Generator

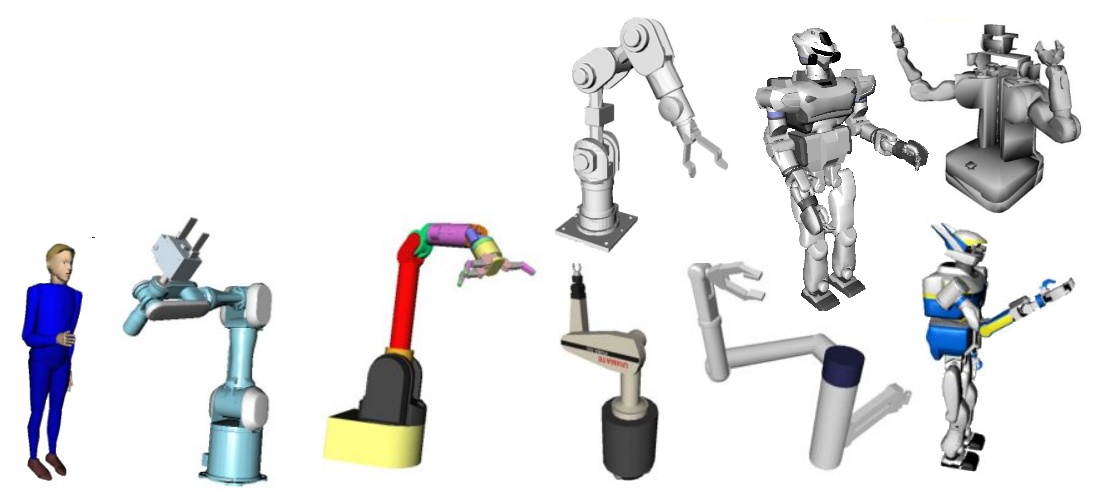

Every system that deals with manipulation planning for robotic arms benefits greatly from having closed-form inverse kinematics solutions for the arm. It is really difficult to use a robot without these equations, since the search time can increase by more than 10x, making any type of real-time movement impossible. Since my time at CMU, I have developed many analytical IK solvers, which has allowed me to narrow the process down to a science. Using this knowledge, I have released an analytical IK generator that produces a C++ file solving the IK equations for most classes of robots (probably the world's first).

Given any set of joints for any robot, ikfast can automatically generate closed-form 6D/3D inverse kinematics solutions for those joints given the position of the end effector of one of the robot links. It is not trivial to create hand-optimized inverse kinematics solutions for arms that capture all degenerate cases. ikfast automatically detects all divide-by-zero situations and creates special branches to handle them. Whenever a variable can be solved in multiple ways, ikfast chooses the simplest, shortest, and most numerically stable solution. ikfast returns all possible solutions and can handle free parameters.
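
To make the ideas of "all solutions", "degenerate branches", and "free parameters" concrete, here is a minimal hand-written sketch for a planar two-link arm. It is not ikfast output and does not use the ikfast API; the function names `planar_2r_ik` and `planar_2r_fk`, the link lengths, and the tolerance `eps` are assumptions chosen for illustration.

```python
import math


def planar_2r_fk(theta1, theta2, l1=1.0, l2=1.0):
    """Forward kinematics: tip position of a planar two-link arm."""
    x = l1 * math.cos(theta1) + l2 * math.cos(theta1 + theta2)
    y = l1 * math.sin(theta1) + l2 * math.sin(theta1 + theta2)
    return x, y


def planar_2r_ik(x, y, l1=1.0, l2=1.0, eps=1e-9):
    """Return every (theta1, theta2) that places the arm tip at (x, y)."""
    r2 = x * x + y * y
    # Law of cosines gives cos(theta2) in closed form.
    c2 = (r2 - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)
    if c2 > 1.0 + eps or c2 < -1.0 - eps:
        return []  # target is out of reach
    c2 = max(-1.0, min(1.0, c2))

    # Degenerate branch: the target sits on the base joint (only reachable
    # when l1 == l2). atan2(y, x) is undefined there, so theta1 becomes a
    # free parameter; this sketch simply fixes it to 0.
    if r2 < eps:
        return [(0.0, math.pi)]

    s2_abs = math.sqrt(1.0 - c2 * c2)
    # A fully stretched or folded arm has a single solution, not two.
    signs = (s2_abs,) if s2_abs < eps else (s2_abs, -s2_abs)

    solutions = []
    for s2 in signs:
        theta2 = math.atan2(s2, c2)
        theta1 = math.atan2(y, x) - math.atan2(l2 * s2, l1 + l2 * c2)
        solutions.append((theta1, theta2))
    return solutions


if __name__ == "__main__":
    for sol in planar_2r_ik(1.0, 1.0):
        print(sol, planar_2r_fk(*sol))
```

A generator has to discover such branches automatically for arbitrary kinematic chains; the sketch only shows what the resulting solver needs to handle.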

Some robots whose kinematics can be solved using ikfast:

Willow Garage PR2 Robot

I had the honor of working at a great robotics startup during the last months of 2008. We were able to get OpenRAVE successfully running with their ROS system. You can find a video of the PR2 manipulating boxes here.


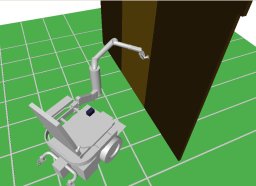

Autonomous Door Opening

Developed algorithms to get any robot to open any door using a new formulation called caging. One advantage of caging a door handle is that it greatly increases the robot's configuration space and makes execution less error-prone, since the hand is not firmly fixed to the handle. There are several movies of the WAM successfully performing this task. All of this was done with OpenRAVE; you can find an explanation and source code of the algorithm in this wiki page.

The first video is the WAM opening a cabinet to put a cup inside.

The second set of videos is the WAM opening a cupboard, closing a cupboard, and opening a refrigerator. Notice how the robot decides to change how it opens the door midway because it is considering the feasibility space.

Personal Robotics Project at Intel

I'm working on an arm to perform various tasks around the kitchen environment. Currently the main focus of our group is to get the arm to autonomously load and unload a dishwasher. Given an arbitrary placement of mugs, the arm can complete the entire grasp planning and execution of putting a mug in the dishrack in about 30-40 s. You can find several movies of this here and here.

Personal Mobility Manipulation Assistant

For a short time, I worked on a project sponsored by the Quality of Life Technology Center to get a wheelchair to autonomously open a door. Although we're still far from a fully finished system, our team has been able to get quite far in the project. You can see a preliminary video of the wheelchair in action here.


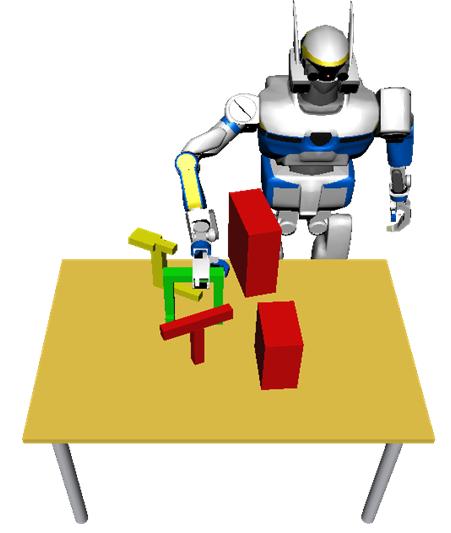

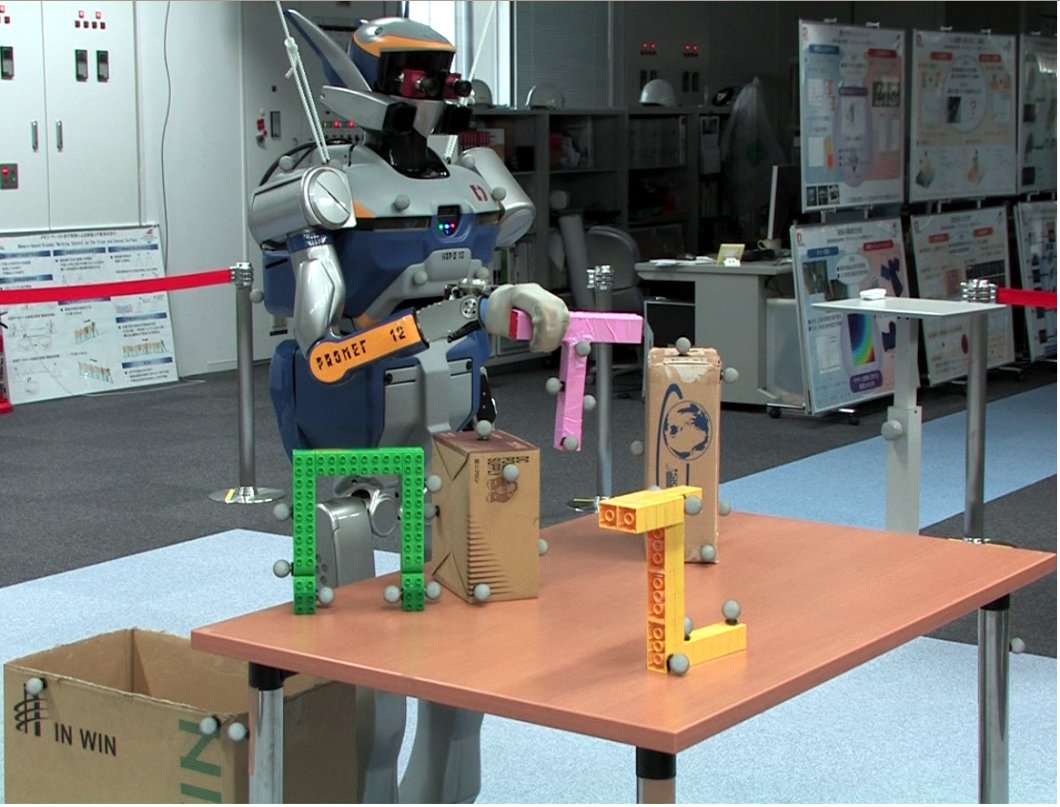

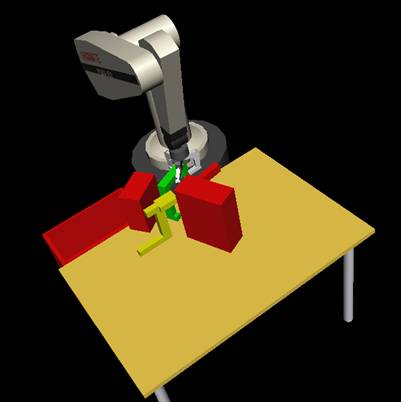

Grasping

I've been working on several projects involving grasp planning in complex and dynamic scenes. Grasping an object involves analyzing the target object and the robot hand for possible stable grasps. This work stands out because we consider the whole grasp planning task at once when choosing the grasps. We can quickly pick grasps such that no part of the robot is in collision with surrounding objects, all kinematic constraints are satisfied, and the grasps are still feasible at the goal. We've implemented this framework on the HRP2 and BarrettWAM robots. This research is done jointly with Dmitry Berenson. Download the paper explaining the method here and a short video here.
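
The idea of checking every task constraint before committing to a grasp can be sketched as a simple filter over ranked candidates. This is only an illustration, not the method from the paper: the `Grasp` class, the `quality` score, and the three toy checks (sets of grasp names standing in for collision, reachability, and goal-feasibility tests) are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Optional, Sequence


@dataclass(frozen=True)
class Grasp:
    name: str
    quality: float  # hypothetical stability score, higher is better


GraspCheck = Callable[[Grasp], bool]


def first_feasible_grasp(
    grasps: Iterable[Grasp], checks: Sequence[GraspCheck]
) -> Optional[Grasp]:
    """Return the best-ranked grasp that passes every check, or None."""
    for grasp in sorted(grasps, key=lambda g: g.quality, reverse=True):
        # all() stops at the first failing check, so cheap tests should
        # come first in the list.
        if all(check(grasp) for check in checks):
            return grasp
    return None


# Toy stand-ins for real geometric and kinematic tests.
IN_COLLISION = {"top"}
UNREACHABLE = set()
BLOCKED_AT_GOAL = {"side"}

checks = [
    lambda g: g.name not in IN_COLLISION,     # robot clear of obstacles
    lambda g: g.name not in UNREACHABLE,      # an arm configuration exists
    lambda g: g.name not in BLOCKED_AT_GOAL,  # still valid at the goal pose
]

candidates = [Grasp("top", 0.9), Grasp("side", 0.7), Grasp("handle", 0.5)]
chosen = first_feasible_grasp(candidates, checks)
print(chosen)
```

In this toy run the two highest-ranked grasps are rejected, one for collision and one for infeasibility at the goal, so the lower-ranked `handle` grasp is selected.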


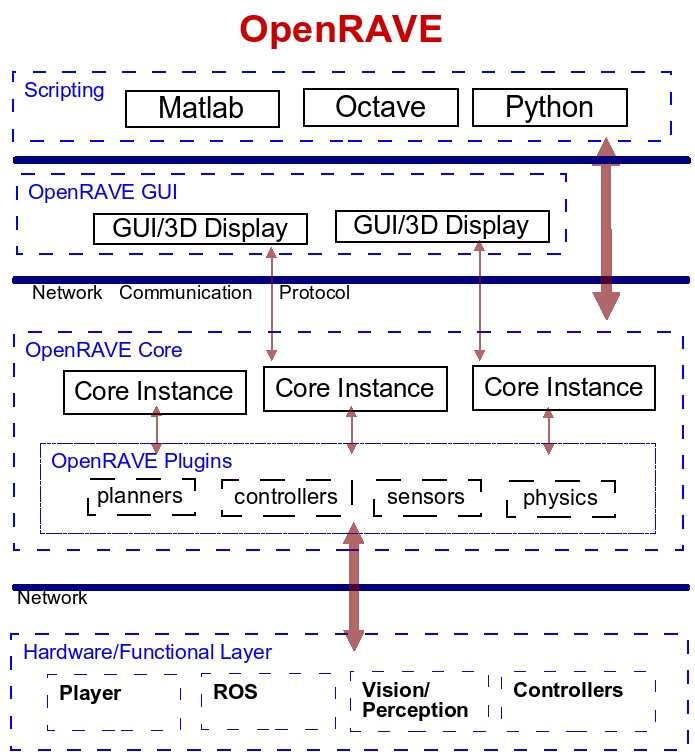

OpenRAVE

Planning in complex scenes and managing all robot information is becoming more and more critical for today's robotic applications. I'm developing a new open-source robot planning architecture called OpenRAVE that serves as the center of all high-level processing the robot has to perform in order to complete its task. Users of OpenRAVE only have to concentrate on the planning and scripting aspects of their task without having to worry about the complex details of collision detection, robot kinematics, dynamic world updates, robot controls, and scripting environments. The architecture is plug-in based so that any planner, robot controller, and robot can be dynamically loaded without having to recompile the core code. This can enable the robotics community to easily share and compare algorithms. OpenRAVE also supports a powerful network scripting environment, which makes it easy to control robots and change execution flow during runtime of the task, all using a program like Matlab.

Go to the OpenRAVE Wiki for installation and usage documentation. The package is publicly available under the Lesser GPL.
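
The plug-in idea described above, where planners and controllers are looked up by name at runtime instead of being compiled into the core, can be sketched in a few lines. This is not OpenRAVE's actual API; `PluginRegistry`, `StraightLinePlanner`, and the `"planner"` kind string are hypothetical names used only to show the pattern.

```python
from typing import Callable, Dict, List, Tuple


class PluginRegistry:
    """Maps (kind, name) pairs to factories so the core never imports plug-ins."""

    def __init__(self) -> None:
        self._factories: Dict[Tuple[str, str], Callable[[], object]] = {}

    def register(self, kind: str, name: str, factory: Callable[[], object]) -> None:
        key = (kind, name.lower())
        if key in self._factories:
            raise ValueError(f"{kind} plug-in {name!r} is already registered")
        self._factories[key] = factory

    def create(self, kind: str, name: str) -> object:
        try:
            factory = self._factories[(kind, name.lower())]
        except KeyError:
            raise KeyError(f"no {kind} plug-in named {name!r}") from None
        return factory()

    def names(self, kind: str) -> List[str]:
        return sorted(n for k, n in self._factories if k == kind)


class StraightLinePlanner:
    """Toy planner: linear interpolation between two scalar joint values."""

    def plan(self, start: float, goal: float, steps: int) -> List[float]:
        return [start + (goal - start) * i / steps for i in range(steps + 1)]


registry = PluginRegistry()
registry.register("planner", "StraightLine", StraightLinePlanner)

# A script only needs the name; swapping planners requires no core changes.
planner = registry.create("planner", "straightline")
print(planner.plan(0.0, 1.0, 2))
```

A real system would load the factories from shared libraries discovered at startup; the dictionary here stands in for that discovery step.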


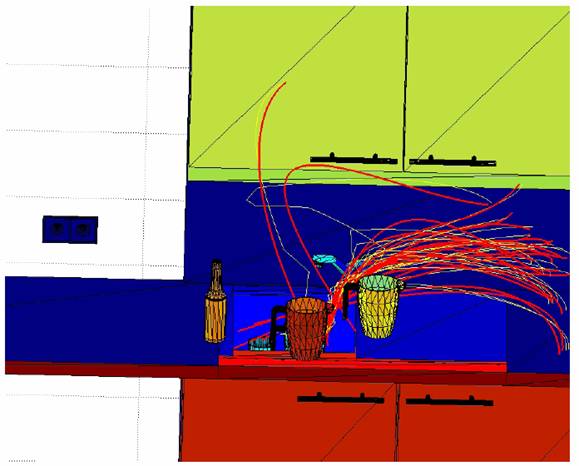

Statistical Path Planning

I'm very interested in using machine learning techniques to help create better planners and heuristics. There are some fundamental questions associated with this work:

- How much can a robot autonomously learn by itself from past failures without any domain knowledge from humans?

- What features should the robot extract from the environment so that it can recognize patterns?

Choromet

I worked for a while on a small 20-degree-of-freedom humanoid robot called Choromet, made by GeneralRobotix.

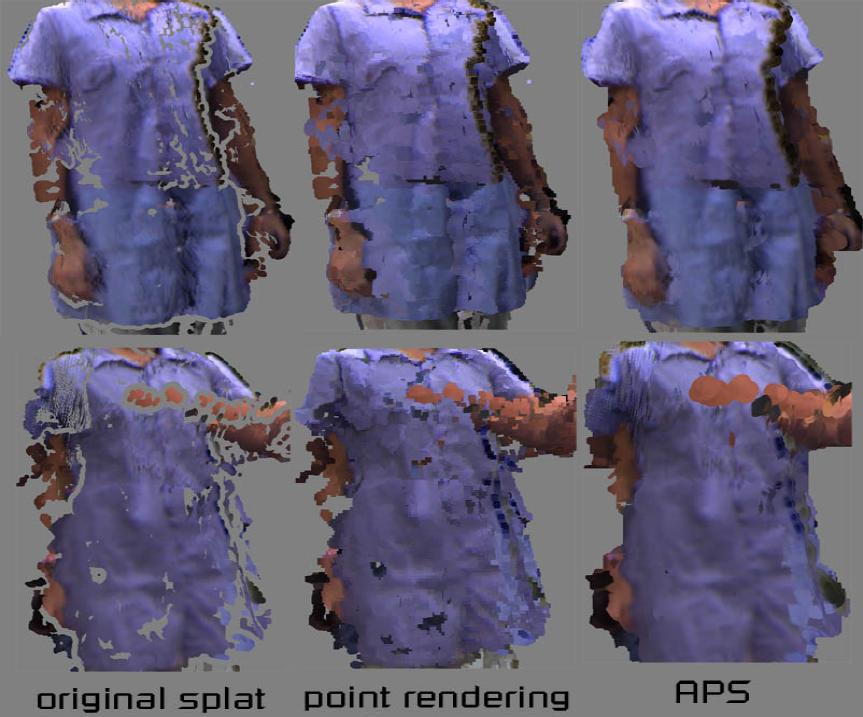

Teleimmersion

I worked for two years at the Teleimmersion Lab at UC Berkeley, where I developed various algorithms for calibration, computer vision tracking, and high-performance graphics rendering with GPUs. Our lab was able to combine two dancers from remote locations in the same virtual space by capturing their 3D point data in real time with 48+ cameras. My contribution was Adaptive Point Splatting, a fast way to render point clouds on GPUs while filling holes and removing outliers.

Expression Cloning

Developed an algorithm to transfer expressions from one face to another. You can find more information about the algorithm in this paper.

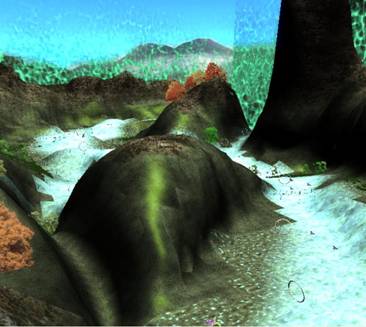

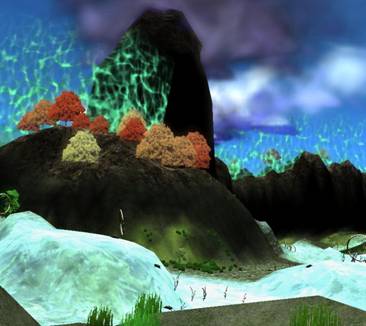

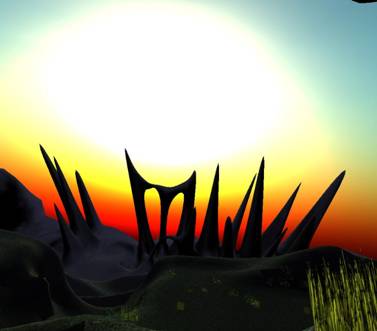

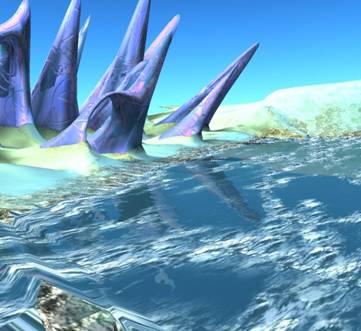

Game Programming

I've been programming 3D graphics since before entering high school. Up until graduating from UC Berkeley, I worked on many 3D games and graphics demos. One of my most notable achievements is dynamic compilation of GPU shader code to fit the current scene. Basically, instead of having thousands of preprocessed shaders, the 3D engine I created dynamically writes the shader code depending on the requirements of the object to be rendered. Below are some of my projects:

ShadowFrameIGF

Submitted a game built on the ShadowFrame engine to the Independent Games Festival 2005. You can download the demo here. Note that you'll probably need a graphics card with Pixel Shader 2.0+ support.

ShadowFrameIC

Imagine Cup 2005 Rendering Competition (semifinalist).

The purpose of the demo is to show off a nice amalgam of computer vision and computer graphics. The ShadowFrame demo uses Intel's OpenCV computer vision library to detect the face, and then uses the face's motion to control the camera. ShadowFrame uses a number of effects supported by a very powerful engine made with DirectX 9. The engine also builds all shaders dynamically to accommodate the different requirements of every object. These include high dynamic range lighting, reflections, atmospheric effects, background scene complexity, etc.
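
Building shaders on demand, as described above, can be sketched as assembling source text from per-feature snippets and caching the result by feature set. This is a rough illustration, not the ShadowFrame engine's code: `ShaderCache`, the feature names, and the HLSL-style snippet strings are all assumptions, and the generated text is a placeholder rather than a complete, compilable shader.

```python
from typing import Dict, FrozenSet, Iterable

# Hypothetical per-feature code fragments, applied in a fixed order.
SNIPPETS: Dict[str, str] = {
    "bump": "normal = normalize(tex2D(normalMap, input.uv).xyz * 2 - 1);",
    "texture": "color *= tex2D(diffuseMap, input.uv);",
    "reflection": "color.rgb += texCUBE(envMap, reflect(input.view, normal)).rgb;",
}
ORDER = ("bump", "texture", "reflection")


class ShaderCache:
    """Generates shader source per feature set and reuses earlier results."""

    def __init__(self) -> None:
        self._cache: Dict[FrozenSet[str], str] = {}
        self.builds = 0  # how many times source was actually generated

    def get(self, features: Iterable[str]) -> str:
        key = frozenset(features)
        unknown = key - set(SNIPPETS)
        if unknown:
            raise ValueError(f"unknown shader features: {sorted(unknown)}")
        if key not in self._cache:
            self._cache[key] = self._build(key)
            self.builds += 1
        return self._cache[key]

    @staticmethod
    def _build(key: FrozenSet[str]) -> str:
        body = ["    " + SNIPPETS[name] for name in ORDER if name in key]
        lines = [
            "float4 main(PSInput input) : COLOR {",
            "    float4 color = input.color;",
            "    float3 normal = normalize(input.normal);",
            *body,
            "    return color;",
            "}",
        ]
        return "\n".join(lines)


cache = ShaderCache()
print(cache.get(["texture", "bump"]))
```

Only the feature combinations that actually occur in a scene are ever generated, which is what avoids shipping thousands of precompiled variants.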

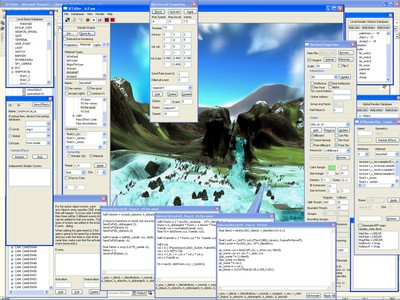

Here is the editor that created all this:

You can download the 150 MB demo here along with the editor. Note: if you don't have the Intel Integrated Performance Primitives library installed, you won't be able to run face tracking (a message box will pop up).

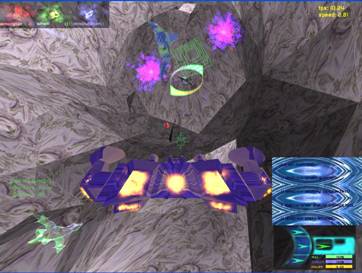

ShadowFire 2003

I started programming this large 3D space simulation game around April 2001. The basic concept was that a ship would fly around a huge space station, pick up items, solve puzzles, and destroy enemies that could come from anywhere. This meant that, on top of the physics and event systems necessary for most space simulation games, there needed to be very robust rendering and collision detection systems that subdivided the scene for maximum optimization. Since I was working with a team of artists and designers, the most important part was to design the 3D engine specifications, all the data formats and databases, and get the game editors up and running as quickly as possible. Here is a small feature list of the final product:

- Databases - I created a huge database system to manage files related to 3D objects: textures, objects, animations, materials, etc. The main idea was that any displayed object would only use an instance of the database data (which was loaded into video memory depending on how many objects were currently referencing it).

- Tools - Combined all the small editors into one large integrated editor. In the editor, you can choose to open any one type of database at a time and work only on it, or open many at the same time. This enabled different users to work on different parts of the game without interfering with each other's data.

- 3D Engine - The rendering engine is extremely fast and flexible. The game engine optimizes and builds unique shaders dynamically depending on the current scene. On top of that, the engine detects whether an object needs bump mapping or reflections, and automatically searches for the fastest path to render all the features.

- Collision Detection/Physics - The collision engine is extremely accurate and physics is taken into account. Any flying object has its own momentum and mass (even weapons).

- Artificial Intelligence - Ships are designed to think about themselves and about their teammates. The AI model is divided into 3 levels: motor skills (going from point A to B without hitting anything), action execution (attack/defend/evade), and cognitive thinking (choosing the next best course of action).
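
The database item in the feature list above describes shared data that stays loaded only while objects reference it. One common way to get that behaviour is reference counting, sketched below. This is an assumption about the mechanism, not the game's actual code; `ResourceDatabase`, `acquire`, and `release` are hypothetical names, and the loader function stands in for an upload to video memory.

```python
from typing import Callable, Dict, List


class ResourceDatabase:
    """Loads each resource once and unloads it when the last user releases it."""

    def __init__(self, loader: Callable[[str], object]) -> None:
        self._loader = loader
        self._entries: Dict[str, List] = {}  # name -> [data, reference count]

    def acquire(self, name: str) -> object:
        entry = self._entries.get(name)
        if entry is None:
            # First reference: load the data (e.g. upload a texture).
            entry = [self._loader(name), 0]
            self._entries[name] = entry
        entry[1] += 1
        return entry[0]

    def release(self, name: str) -> None:
        entry = self._entries[name]
        entry[1] -= 1
        if entry[1] == 0:
            # Last reference gone: free the shared data.
            del self._entries[name]

    def is_loaded(self, name: str) -> bool:
        return name in self._entries


load_log: List[str] = []


def fake_loader(name: str) -> object:
    load_log.append(name)
    return {"name": name}


db = ResourceDatabase(fake_loader)
hull_a = db.acquire("hull.tex")
hull_b = db.acquire("hull.tex")  # second ship shares the same data
print(hull_a is hull_b, load_log)
```

Every object instance holds only a handle to the shared entry, so a hundred ships using the same texture cost one copy of it.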

3D Engine

This is a 3D engine I developed in 2000. You can download the exe and source code here (under the GPL licence). The engine is made for Win32 and supports:

- Clipping

- Z-buffers

- Gouraud shading

- Flat shading

- Wireframe rendering

- Simple raytracing so the mouse can grab the polygon and rotate it.
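
Mouse picking by raytracing, the last item in the list above, comes down to intersecting a ray from the camera with each triangle. The sketch below uses the standard Möller-Trumbore ray-triangle test; whether the original engine used this exact method is not stated, and the function name `ray_triangle` and the tuple-based vectors are choices made for illustration.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


def ray_triangle(
    origin: Vec3, direction: Vec3, v0: Vec3, v1: Vec3, v2: Vec3, eps: float = 1e-9
) -> Optional[float]:
    """Return the ray parameter t of the hit point, or None if there is no hit."""
    e1, e2 = sub(v1, v0), sub(v2, v0)
    p = cross(direction, e2)
    det = dot(e1, p)
    if abs(det) < eps:
        return None  # ray is parallel to the triangle plane
    inv_det = 1.0 / det
    t_vec = sub(origin, v0)
    u = dot(t_vec, p) * inv_det  # first barycentric coordinate
    if u < 0.0 or u > 1.0:
        return None
    q = cross(t_vec, e1)
    v = dot(direction, q) * inv_det  # second barycentric coordinate
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(e2, q) * inv_det
    return t if t > eps else None  # ignore hits behind the ray origin


if __name__ == "__main__":
    tri = ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))
    print(ray_triangle((0.25, 0.25, 1.0), (0.0, 0.0, -1.0), *tri))
```

To pick a polygon, the ray through the mouse cursor is tested against every triangle and the hit with the smallest `t` is the one under the pointer.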